Software development is not as simple as it used to be. Today, almost every piece of software is equipped with top-notch technologies and advanced features that make it complex. This makes code security challenging for developers who rely on traditional testing tools or manual reviews to find vulnerabilities. Zero-day vulnerabilities are especially hard to catch: they are flaws unknown to the vendor that can hide in secret, almost undetectable spots in the code.

This is where AI agents for code security come in. These agents can autonomously identify and even fix code security flaws at a speed human developers can’t match. The question is, how does an AI agent do this?

Since this is a relatively new use case for AI agents, most readers also want to know about their benefits, types, technical architecture, real-world examples, and other critical aspects. That’s what this blog covers. So, without further ado, let’s get started.

What Are AI Agents for Code Security?

AI agents for code security are autonomous software systems that find, review, and fix vulnerabilities throughout the software development lifecycle with minimal manual intervention. Traditional automated tools can also scan code without human input, but they cannot learn from patterns on their own.

This self-learning capability enables the agents to learn patterns from codebases, security advisories, and past incidents. As a result, they can detect subtle flaws in code logic and insecure dependencies, and even flag unusual code behavior. Imagine how much time a manual review team would need to do all this.

Beyond identifying issues, an AI code security agent can also recommend fixes and even rewrite insecure code blocks in line with compliance standards. The agent can integrate with IDEs (Integrated Development Environments), CI/CD pipelines, and code repositories to keep code resilient.

- Autonomous: AI agents that safeguard code operate independently.

- Proactive: Code security AI agents can predict and prevent vulnerabilities for proactive decision-making.

- Adaptive: Intelligent code security agents automatically evolve with every interaction.

How an AI Agent Works to Ensure Code Security

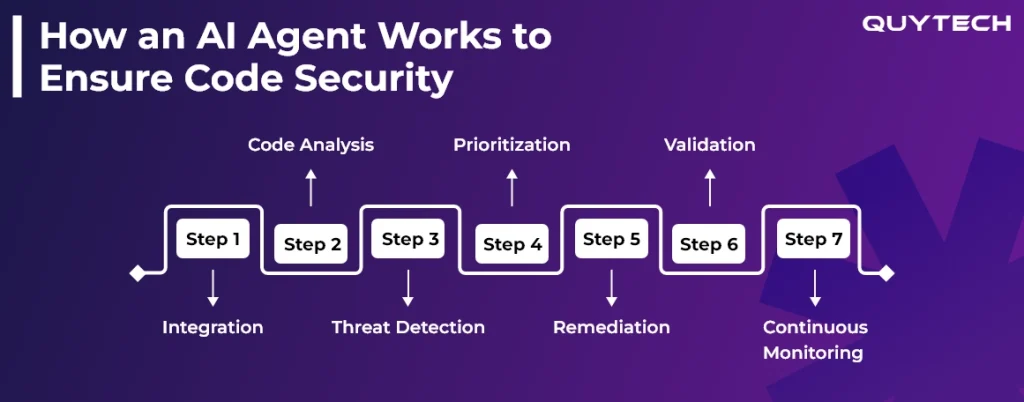

Smart agents for code security follow a stepwise approach to perform their designated functions or tasks.

Step 1: Integration: The agent connects with IDEs, CI/CD pipelines, and code repositories.

Step 2: Code Analysis: It performs static and dynamic analysis to scan and assess code.

Step 3: Threat Detection: Based on the analysis, the agent identifies risky patterns, vulnerabilities, and misconfigurations.

Step 4: Prioritization: Considering the severity and impact of the issues, the agent prioritizes and ranks them.

Step 5: Remediation: The agent recommends fixes or automatically applies code patches.

Step 6: Validation: The agent automatically runs tests to make sure the fixes don’t break the code’s functionality.

Step 7: Continuous Monitoring: The agent learns automatically from developers’ feedback and continuously watches the code for new vulnerabilities.
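To make Step 4 more concrete, here is a minimal Python sketch of how an agent might rank findings. It assumes each finding carries a CVSS v3 base score plus a hypothetical `reachable` flag (whether the vulnerable code can be reached from an entry point). The halving penalty for unreachable code is an illustrative assumption, not an industry standard.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    rule_id: str
    file: str
    cvss: float      # CVSS v3 base score, 0.0-10.0
    reachable: bool  # hypothetical: reachable from an entry point?


def cvss_rating(score: float) -> str:
    """Map a CVSS v3 base score to its qualitative severity rating."""
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def priority(f: Finding) -> float:
    # Illustrative weighting (an assumption): halve the score of unreachable findings.
    return f.cvss * (1.0 if f.reachable else 0.5)


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from most to least urgent."""
    return sorted(findings, key=priority, reverse=True)


if __name__ == "__main__":
    findings = [
        Finding("sql-injection", "api/users.py", 9.8, reachable=False),
        Finding("hardcoded-secret", "config.py", 7.5, reachable=True),
        Finding("weak-hash", "auth/hash.py", 5.3, reachable=True),
    ]
    for f in prioritize(findings):
        print(f"{priority(f):4.1f}  {cvss_rating(f.cvss):8}  {f.rule_id}  {f.file}")
```

With these sample values, the unreachable "Critical" finding drops below two reachable ones. That is exactly the kind of context-driven trade-off a real agent would need to justify to developers.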

Why Traditional Code Security Tools No Longer Work

As mentioned earlier, the growing complexity of software development is difficult to handle with conventional code security practices such as static application security testing (SAST) and dependency scanners. Here’s why:

- Reactive, Not Preventive

Traditional tools typically identify vulnerabilities only after coding is complete. This reactive approach can add cost and increase time-to-market.

- High False-Positive Rates

Dependency scanners may generate many false positives or irrelevant alerts, making it harder for developers to spot the critical issues.

- Lack of Contextual Understanding

Conventional tools rely on predefined rules and patterns. They can’t understand the intent or context of the code, so they may miss complex logic issues.

- Poor Integration with Modern Workflows

Older testing tools often work in isolation and are difficult to integrate with CI/CD pipelines. This makes them a poor fit for agile or DevOps environments.
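The false-positive and context problems are easy to reproduce. Below is a minimal Python sketch of a purely pattern-based rule. The regex is hypothetical and not taken from any real scanner. It treats string concatenation inside an `execute(...)` call as SQL injection. Because the rule sees only one line at a time, it flags a safe call and misses an unsafe one.

```python
import re

# Hypothetical line-based rule: "+" inside execute(...) => possible SQL injection.
RULE = re.compile(r"execute\(.*\+")

SAMPLE = '''\
cursor.execute("SELECT * FROM users WHERE id = ?" + " LIMIT 1", (user_id,))
query = "SELECT * FROM users WHERE name = '" + name + "'"
cursor.execute(query)
'''


def scan(source: str) -> list[int]:
    """Return the 1-based line numbers that the rule flags."""
    return [n for n, line in enumerate(source.splitlines(), start=1) if RULE.search(line)]


if __name__ == "__main__":
    # Line 1 is flagged even though it only joins two constant strings and binds
    # user_id as a parameter (false positive). Lines 2-3 build the query from
    # untrusted input, but the concatenation and the execute() call sit on
    # different lines, so the rule stays silent (false negative).
    print(scan(SAMPLE))  # [1]
```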

The Role of AI Agents in Secure Coding Workflows

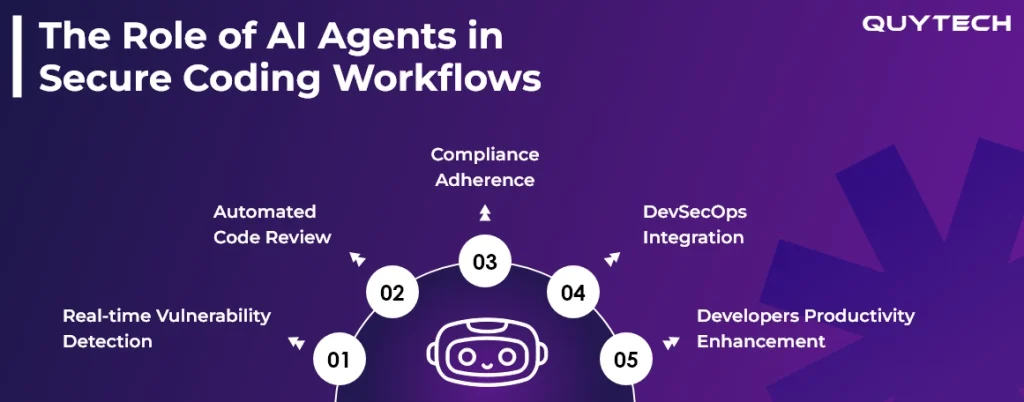

AI agents fit into the software development lifecycle and help ensure security at each milestone, keeping code security continuous and developer-friendly. Here’s how:

- Real-time Vulnerability Detection

Intelligent code security agents can work within IDEs or code repositories and scan code as it is being written. In other words, code is scanned and issues are flagged in real time.

- Automated Code Review

In traditional practice, a team of human professionals or testers performs code reviews after the code is completely written. However, AI agents can review pull requests for insecure and unusual patterns and compliance problems without manual intervention.

- Compliance Adherence

Whether simple or complex, every software project needs to adhere to industry standards and frameworks such as OWASP, ISO, and SOC 2. AI agents can automatically enforce policies so that every piece of code meets these compliance requirements and is secure.

- DevSecOps Integration

Artificial intelligence agents can integrate with CI/CD pipelines to run security checks during the development and deployment phases. The best part is that they do this with minimal impact on development time.

- Developer Productivity Enhancement

AI agents identify a wide range of security issues and provide guided fixes along with clear explanations. By implementing an AI agent for code security, development teams can strengthen their code security while learning best practices.
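Automated pull request review can be illustrated with the Python sketch below. It inspects only the lines a pull request adds, which are the lines starting with `+` in a unified diff, and checks them for two risky patterns. Both rules are deliberately simple, hypothetical examples. A real agent would combine rules like these with model-based reasoning.

```python
import re

# Hypothetical example rules: (rule id, compiled pattern).
RULES = [
    ("hardcoded-secret",
     re.compile(r"(?i)(api_key|password|secret)\s*=\s*['\"][^'\"]+['\"]")),
    ("shell-injection", re.compile(r"subprocess\.\w+\(.*shell\s*=\s*True")),
]

SAMPLE_DIFF = '''\
diff --git a/app.py b/app.py
--- a/app.py
+++ b/app.py
@@ -1,3 +1,4 @@
 import subprocess
-API_KEY = os.environ["API_KEY"]
+API_KEY = "sk-test-123"
+subprocess.run(cmd, shell=True)
 print("ok")
'''


def review_diff(diff: str) -> list[tuple[str, int, str]]:
    """Return (file, line in new file, rule id) for every flagged added line."""
    findings = []
    current_file, new_line = "", 0
    for line in diff.splitlines():
        if line.startswith("+++ "):
            current_file = line[4:].removeprefix("b/")
        elif line.startswith("@@"):
            # Hunk header "@@ -old_start,old_len +new_start,new_len @@"
            new_line = int(re.search(r"\+(\d+)", line).group(1))
        elif line.startswith("+"):
            for rule_id, pattern in RULES:
                if pattern.search(line):
                    findings.append((current_file, new_line, rule_id))
            new_line += 1
        elif line.startswith(" "):
            new_line += 1  # unchanged context line
        # Removed lines ("-") do not exist in the new file, so they are skipped.
    return findings


if __name__ == "__main__":
    for finding in review_diff(SAMPLE_DIFF):
        print(finding)
```

Reviewing only added lines keeps the agent’s comments focused on what the pull request actually changes. Older issues in the file are better handled by a full repository scan.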

How to Develop AI Agents for Code Security

Follow this stepwise process to build AI agents for code security:

Step 1: Define Security Objectives and Scope

The first step is to identify the core purpose of your AI agent. What types of vulnerabilities do you want it to detect, and where exactly do you want to deploy it? The answers clarify which data sources, model type, and evaluation metrics you need.

Step 2: Collect and Label Vulnerability Datasets

Gather vulnerability data from sources such as GitHub, the NVD (National Vulnerability Database), and CVE records. Label each sample with the type of vulnerability it contains. These labels are what let you train and fine-tune your model accurately.
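One common labeling scheme uses CWE (Common Weakness Enumeration) categories. The sketch below assumes records shaped like the JSON returned by the NVD CVE API 2.0, where each CVE lists its weaknesses as CWE identifiers. It groups CVE IDs by CWE. The sample IDs are made up. Pairing each CVE with the vulnerable code, for example from the fixing commit on GitHub, is a separate step not shown here.

```python
import json
from collections import defaultdict

# A trimmed, made-up sample in the shape of an NVD CVE API 2.0 response.
SAMPLE = """
{"vulnerabilities": [
  {"cve": {"id": "CVE-0000-0001",
           "weaknesses": [{"description": [{"lang": "en", "value": "CWE-89"}]}]}},
  {"cve": {"id": "CVE-0000-0002",
           "weaknesses": [{"description": [{"lang": "en", "value": "CWE-79"}]}]}},
  {"cve": {"id": "CVE-0000-0003",
           "weaknesses": [{"description": [{"lang": "en", "value": "NVD-CWE-Other"}]}]}}
]}
"""


def label_by_cwe(nvd_json: str) -> dict[str, list[str]]:
    """Group CVE IDs by the CWE identifiers listed in their weaknesses."""
    labels = defaultdict(list)
    for item in json.loads(nvd_json)["vulnerabilities"]:
        cve = item["cve"]
        cwes = {
            d["value"]
            for w in cve.get("weaknesses", [])
            for d in w.get("description", [])
            if d["value"].startswith("CWE-")  # skips NVD-CWE-Other / NVD-CWE-noinfo
        }
        # CVEs without a concrete CWE go into a catch-all bucket for manual labeling.
        for cwe in sorted(cwes) or ["UNLABELED"]:
            labels[cwe].append(cve["id"])
    return dict(labels)


if __name__ == "__main__":
    print(label_by_cwe(SAMPLE))
```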

Step 3: Fine-Tune a Code Language Model

Start from a pretrained code model such as CodeBERT, Codex, or StarCoder, and fine-tune it on the labeled datasets. This helps the model learn vulnerability patterns, code syntax, and other security-related issues.

Step 4: Build the Agent Layer

Next, integrate the trained model into an autonomous agent framework so that it can reason, act, and iterate. This enables the agent to detect vulnerabilities and suggest fixes automatically.
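The reason-act-iterate loop can be sketched in a few lines of Python. `propose_fix` and `run_checks` are hypothetical stand-ins for the fine-tuned model and for your test or scanner harness. The sketch is about control flow: propose a patch, verify it, feed any failure back, and stop after a bounded number of attempts.

```python
from typing import Callable, Optional

# Hypothetical interfaces for illustration:
#   propose_fix(code, feedback) -> patched code (the fine-tuned model)
#   run_checks(code) -> None if the patch passes, else an error message
ProposeFix = Callable[[str, str], str]
RunChecks = Callable[[str], Optional[str]]


def remediate(code: str, propose_fix: ProposeFix, run_checks: RunChecks,
              max_attempts: int = 3) -> Optional[str]:
    """Reason-act-iterate: return a patch that passes the checks, or None."""
    feedback = "initial scan flagged a vulnerability"
    for _ in range(max_attempts):
        candidate = propose_fix(code, feedback)  # reason and act
        error = run_checks(candidate)            # observe the result
        if error is None:
            return candidate                     # hand off for human review
        feedback = error                         # iterate with the new context
    return None                                  # give up and escalate to a human


if __name__ == "__main__":
    # Toy stand-ins: the "model" only gets it right on its second try.
    def toy_model(code: str, feedback: str) -> str:
        if feedback.startswith("initial"):
            return code.replace("name", "name.strip()")
        return "cursor.execute(query, (name,))"

    def toy_checks(code: str) -> Optional[str]:
        return "query still built by concatenation" if "+" in code else None

    print(remediate("cursor.execute(query + name)", toy_model, toy_checks))
```

Bounding the number of attempts matters. An agent that keeps retrying a failing patch wastes compute and can drift further from the original code, so escalating to a human is the safer default.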

Step 5: Integrate Static and Dynamic Analysis Tools

Connect the agent to CodeQL, Snyk, and other tools so that it combines AI-driven reasoning with conventional security scanning for higher accuracy.
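Scanners such as CodeQL can emit results in SARIF, a JSON-based standard format for static analysis output. This makes their results straightforward to merge into the agent’s own findings. The sketch below reads a SARIF 2.1.0 log and extracts the rule ID, level, file, and line. It relies only on core SARIF fields. The inline sample log is made up for illustration.

```python
import json

SAMPLE_SARIF = """
{"version": "2.1.0", "runs": [{
  "tool": {"driver": {"name": "CodeQL"}},
  "results": [{
    "ruleId": "py/sql-injection",
    "level": "error",
    "message": {"text": "This SQL query depends on a user-provided value."},
    "locations": [{"physicalLocation": {
      "artifactLocation": {"uri": "app/db.py"},
      "region": {"startLine": 42}}}]
  }]
}]}
"""


def parse_sarif(text: str) -> list[dict]:
    """Flatten SARIF results into simple dicts the agent can reason over."""
    findings = []
    for run in json.loads(text).get("runs", []):
        tool = run["tool"]["driver"]["name"]
        for result in run.get("results", []):
            loc = (result.get("locations") or [{}])[0].get("physicalLocation", {})
            findings.append({
                "tool": tool,
                "rule": result.get("ruleId"),
                "level": result.get("level", "warning"),  # SARIF's default level
                "file": loc.get("artifactLocation", {}).get("uri"),
                "line": loc.get("region", {}).get("startLine"),
                "message": result["message"].get("text", ""),
            })
    return findings


if __name__ == "__main__":
    for finding in parse_sarif(SAMPLE_SARIF):
        print(finding)
```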

Step 6: Implement a Secure Feedback Loop

Next, make sure human experts validate and test every suggestion the agent provides. Feeding their verdicts back into the model improves its understanding over time.
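One simple way to close the feedback loop is to record each reviewer verdict and track precision per detection rule. Rules that reviewers keep rejecting can then be down-weighted or sent back for retraining. The class below is a hypothetical sketch. Its 0.5 precision threshold and three-review minimum are arbitrary assumptions.

```python
from collections import Counter


class FeedbackLog:
    """Tracks reviewer verdicts (accepted / rejected) per detection rule."""

    def __init__(self) -> None:
        self.accepted: Counter = Counter()
        self.rejected: Counter = Counter()

    def record(self, rule_id: str, accepted: bool) -> None:
        (self.accepted if accepted else self.rejected)[rule_id] += 1

    def precision(self, rule_id: str) -> float:
        total = self.accepted[rule_id] + self.rejected[rule_id]
        return self.accepted[rule_id] / total if total else 0.0  # 0.0 = no reviews yet

    def noisy_rules(self, threshold: float = 0.5, min_reviews: int = 3) -> list[str]:
        """Rules with enough reviews whose precision falls below the threshold."""
        rules = set(self.accepted) | set(self.rejected)
        return sorted(
            r for r in rules
            if self.accepted[r] + self.rejected[r] >= min_reviews
            and self.precision(r) < threshold
        )
```

Requiring a minimum number of reviews keeps a single rejection from silencing a rule that is usually right.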

Step 7: Embed the Agent into DevSecOps Pipelines

Deploy the AI agent in IDEs and CI/CD pipelines so that it can automatically scan for, flag, and fix security vulnerabilities before the code moves to the next stage, such as production.
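In a CI/CD pipeline, the usual way to stop insecure code from moving forward is to fail the job. The minimal sketch below assumes the agent writes its findings as a JSON list of objects with a `severity` field. That format, and the `gate.py` and `findings.json` names, are hypothetical. The script exits with a non-zero code when any finding reaches the chosen severity, and most CI systems treat a non-zero exit as a failed step.

```python
import json
import sys

SEVERITY_ORDER = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def gate(findings: list[dict], fail_at: str = "high") -> int:
    """Return a process exit code: 1 if any finding meets `fail_at`, else 0."""
    threshold = SEVERITY_ORDER[fail_at]
    blocking = [f for f in findings
                if SEVERITY_ORDER.get(f.get("severity", "").lower(), 0) >= threshold]
    for f in blocking:
        print(f"BLOCKING: {f.get('rule')} in {f.get('file')} ({f['severity']})",
              file=sys.stderr)
    return 1 if blocking else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical file names): python gate.py findings.json
    with open(sys.argv[1]) as fh:
        sys.exit(gate(json.load(fh)))
```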

Step 8: Ensure Explainability and Compliance

Finally, add an explainability module to the agent. It tells developers why a particular issue was flagged and how the agent fixed it, which supports compliance and builds trust.

Implementing Autonomous Agents in Security? Consider These 5 Practices

For the successful implementation of intelligent agents in security, you can consider the following practices:

- Pilot Deployment

Start small before rolling an AI agent out across all your development practices. Pick a low-risk project and define clear KPIs to track whether the agent is serving its purpose.

- Integration Strategy

Make sure the agent is compatible with your current toolchain, such as Git, issue trackers, IDEs, and CI/CD pipelines, and monitor how the integration affects build times.

- Governance and Policy Controls

Clearly define agent permissions and human-in-the-loop approval requirements, and enforce them with role-based access controls.

- Data Access and Security

Restrict exposure of sensitive data by enforcing least-privilege access controls and encrypting confidential logs.

- Enable Human-in-the-Loop

Don’t leave everything to AI agents, especially in the early stages. Require an expert’s approval before any code fix is applied.

Beyond these, other best practices that support the long-term success of AI agents include maintaining an accuracy and feedback loop, ensuring compliance and auditability, tracking metrics, and managing change smoothly.
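The governance and human-in-the-loop practices above can be expressed as a small policy check that runs before the agent acts. This is a hypothetical sketch. The role names, the action names, and the rule that code-changing actions always need a human sign-off are assumptions you would replace with your own policy.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical role -> allowed actions mapping (role-based access control).
ROLE_PERMISSIONS = {
    "scanner-agent": {"read_code", "comment"},
    "remediation-agent": {"read_code", "comment", "open_pull_request"},
}
# Actions that change code always require a human reviewer's approval.
REQUIRES_APPROVAL = {"open_pull_request", "merge"}


@dataclass
class Action:
    role: str
    name: str
    approved_by: Optional[str] = None  # reviewer who signed off, if any


def is_allowed(action: Action) -> bool:
    """Allow only if the role grants the action and, where required, a human approved it."""
    if action.name not in ROLE_PERMISSIONS.get(action.role, set()):
        return False
    if action.name in REQUIRES_APPROVAL and not action.approved_by:
        return False
    return True
```

Note that no agent role is granted `merge` here. Keeping that permission with humans is one straightforward way to enforce least privilege.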

Types of AI Agents for Code Security

A code security AI agent can be of several types, depending on the role or task it performs during the SDLC (software development lifecycle). Here are the main ones:

| Type of AI Code Security Agent | Purpose |
|---|---|
| Code Security Agents | Leverage pattern recognition and semantic understanding to analyze source code and identify issues such as insecure logic, syntax flaws, or weak authentication flows. |
| Dependency Management Agents | Identify obsolete or vulnerable libraries, recommend secure alternatives, and automate dependency updates. Suitable for reducing supply chain risks. |
| CI/CD Security Agents | Fit into build and deployment pipelines and keep tabs on commits, builds, and releases to stop insecure code from progressing. |
| Compliance and Policy Agents | Automatically check that code practices align with security guidelines, frameworks, and regulations such as OWASP, GDPR, HIPAA, or SOC 2. |
| Multi-Agent Orchestration Systems | Multiple agents work together on different code security tasks. For example, one agent may generate fixes while another tests the patches. |

Key Benefits of Using AI Agents in Secure Code Development

Implementing AI agents for code security offers a wide array of benefits, from early vulnerability detection and context-aware insights to faster time-to-market. Here’s how:

- Detecting Vulnerabilities at an Early Stage

AI agents built for code security detect vulnerabilities in real time, as code is written. Catching issues early saves the effort and cost of rework while minimizing exposure to threats.

- Quicker Remediation

AI agents reduce the need for manual code review. They review code on their own and suggest, or even apply, fixes, which speeds up resolution.

- Context-Aware Insights

AI agents can understand the intent and context of the code. With that understanding, they can reduce false positives so that mostly genuine issues are flagged.

- Continuous Learning and Adaptation

AI agents deployed across the software development lifecycle can learn from newly discovered vulnerabilities. They also take developer feedback into account to guard against emerging threats.

- Faster Deployment

As noted earlier, AI agents can be integrated smoothly into CI/CD pipelines to run security checks in real time at every stage. This speeds up development and deployment.

- Stronger Compliance and Governance

Code security AI agents automate code documentation and audit trails. This enables developers to build software that complies with international security standards.

Technical Architecture & Capabilities of Intelligent Agents in Code Security

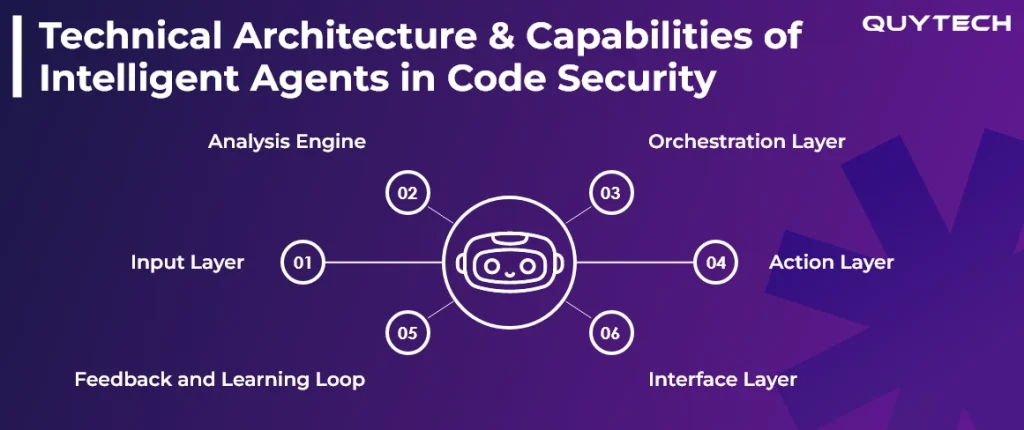

Before implementing AI agents in code security, you should understand their technical architecture and key capabilities. This section covers both, starting with how AI agents are structured:

- Input Layer

At this layer, the agent ingests source code, commit history, dependency manifests, and threat intelligence data to prepare for code security reviews, audits, and verifications.

- Analysis Engine

The analysis engine can be considered the brain of the agent. It uses static and dynamic code analysis, ML models, and knowledge graphs to identify security and logic flaws, understand code semantics, flag risky patterns, and map relationships between parts of the code.

- Orchestration Layer

The third layer coordinates different security tasks. It enables multi-agent collaboration, so that different agents can take on different roles, such as scanning for vulnerabilities, managing compliance, and validating fixes, for end-to-end code security.

- Action Layer

In this layer, AI agents apply fixes to the detected issues, such as patching libraries or rewriting insecure code blocks. Note that a human professional must review every fix the agent applies.

- Feedback and Learning Loop

In this layer, AI agents review feedback, test results, and new vulnerability data to retrain or self-learn. With this learning, the output of the agents becomes more accurate, and the chances of false positives are reduced.

- Interface Layer

AI agents interact with developers via IDEs, dashboards, and DevOps tools. Developers can see detected vulnerabilities, review recommended fixes, and receive automated compliance reports, all in real time.
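The layers above can be pictured as a pipeline. The Python sketch below wires together heavily simplified, hypothetical versions of each layer. Every function name is illustrative, and the "analysis engine" only looks for `eval(` as a stand-in for real static or dynamic analysis. In practice, each layer would call models, scanners, and your repository instead of working on an in-memory dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class Finding:
    file: str
    issue: str
    proposed_fix: str = ""
    status: str = "open"  # open -> fix_proposed -> approved / rejected


@dataclass
class Context:
    source: dict[str, str]  # input layer output: path -> code
    findings: list[Finding] = field(default_factory=list)


def input_layer(repo: dict[str, str]) -> Context:
    return Context(source=dict(repo))


def analysis_engine(ctx: Context) -> None:
    # Stand-in for static/dynamic analysis and ML models: flag any use of eval().
    for path, code in ctx.source.items():
        if "eval(" in code:
            ctx.findings.append(Finding(path, "use of eval()"))


def action_layer(ctx: Context) -> None:
    # Only proposes fixes; nothing is applied without human review.
    for f in ctx.findings:
        f.proposed_fix = "replace eval() with ast.literal_eval() or explicit parsing"
        f.status = "fix_proposed"


def feedback_loop(ctx: Context, verdicts: dict[str, bool]) -> None:
    # Reviewer verdicts per file; a real system would also feed these into retraining.
    for f in ctx.findings:
        f.status = "approved" if verdicts.get(f.file) else "rejected"


def orchestrate(repo: dict[str, str], verdicts: dict[str, bool]) -> list[Finding]:
    """Orchestration layer: run each layer in order; the result feeds the interface layer."""
    ctx = input_layer(repo)
    analysis_engine(ctx)
    action_layer(ctx)
    feedback_loop(ctx, verdicts)
    return ctx.findings


if __name__ == "__main__":
    repo = {"a.py": "x = eval(data)", "b.py": "print(1)"}
    for f in orchestrate(repo, {"a.py": True}):
        print(f)
```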

Types of Code Vulnerabilities AI Agents Can Detect

Below are the types of code security issues, flaws, or vulnerabilities that an AI agent can detect and fix.

| Vulnerability Type | Description |
|---|---|
| Injection Vulnerabilities | Detection of issues like SQL, command, or code injection. |
| Authentication and Authorization Flaws | Identification of weak password implementations, missing access controls, or privilege escalation risks. |
| Insecure API Usage | Flagging of unsafe API endpoints, improper input validation, or exposed tokens and keys. |
| Dependency and Supply Chain Risks | Monitoring of third-party packages, libraries, and open-source dependencies for known vulnerabilities. |
| Data Exposure and Leakage | Detection of hardcoded credentials, unsecured environment variables, or improper encryption. |
| Configuration and Deployment Errors | Flagging of insecure cloud configurations, missing HTTPS enforcement, or mismanaged secrets in CI/CD pipelines. |
| Buffer Overflow and Memory Corruption | Identification of unsafe memory operations that may lead to crashes or arbitrary code execution. |
| Cross-Site Request Forgery (CSRF) | Detection of missing protections, such as anti-CSRF tokens, that would let attackers trick an authenticated user’s browser into sending unwanted requests. |
| Logic and Semantic Vulnerabilities | Finding hidden flaws, like bypassing payment validation or skipping security checks. |
| Compliance Violations | Identification of deviations from security frameworks. |
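To make the first row of the table concrete, here is the kind of rewrite an agent might propose for an injection flaw. The example uses Python’s built-in `sqlite3` module. The vulnerable version splices user input into the SQL string. The fixed version passes the input as a bound parameter, so it is treated as data rather than as SQL.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [("alice", "admin"), ("bob", "user")])
    return conn


def find_user_vulnerable(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Vulnerable: user input is concatenated into the SQL text.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = '" + name + "'").fetchall()


def find_user_fixed(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Fixed: the value is bound as a parameter and never parsed as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()


if __name__ == "__main__":
    conn = setup()
    payload = "nobody' OR '1'='1"
    print(find_user_vulnerable(conn, payload))  # returns every row in the table
    print(find_user_fixed(conn, payload))       # []
```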

Real-World Examples of AI Agents in Code Security

By now, you should have an idea of what AI agents can do for code security. Several organizations have already put these intelligent agents to work. Let’s look at some examples:

- Google DeepMind’s CodeMender

Google DeepMind has introduced CodeMender, an AI agent that automatically detects code vulnerabilities. It can also rewrite insecure code and independently validate its fixes, with the aim of enhancing security and improving developer productivity.

- GitHub’s CodeQL with AI-Assisted Scanning

GitHub has integrated intelligent pattern recognition into its CodeQL static analysis system to help find new vulnerabilities, including zero-days and hidden logic flaws, across large codebases. CodeQL can also suggest query optimizations and auto-generate new security patterns.

- Google’s OSS-Fuzz with Machine Learning Agents

Google also uses AI agents to guide fuzz testing in OSS-Fuzz and identify weak code paths in open-source software. The agents can analyze crash data, predict untested areas, and adjust fuzzing strategies to uncover vulnerabilities.

Challenges and Risks of Using AI Agents for Securing Code

Implementing AI agents for code security comes with various challenges that can be overcome by choosing the right approach.

- False Positives and Negatives: This challenge can be overcome by merging AI-driven scans with human monitoring and review.

- Overreliance on Automation: Mitigate this problem by maintaining a human-in-the-loop model in which an expert will review and approve changes made by the agent.

- Limited Context Awareness: To overcome this issue, configure agents with contextual metadata and blend them with domain-aware pipelines.

- Data Privacy and Security Concerns: Implement least-privilege access and ensure code compliance with security standards and regulations.

- Training Data Limitations: Keep updating the code repositories and databases for continuous model training.

- Integration Complexities: Integrate in phases, using sandbox testing before deploying the software.

Future Trends in AI Agents for Code Security

If you are planning to build AI agents for code security and embed them in IDEs, keep these future trends in mind:

- We may see a shift toward contextual, intent-aware agents that go beyond pattern recognition to understand developer intent and application logic.

- The next trend in AI code security agents is self-learning and continual adaptation. Besides developer feedback and code repositories, intelligent agents will also auto-learn from real-world cyber attacks.

- Multi-agent collaboration will become more common in 2026 and beyond. This will distribute the code security reviews, testing, and compliance verification tasks to different agents for more efficient and highly scalable security workflows.

- Integration with autonomous DevSecOps pipelines may also become a common practice to shorten remediation cycles.

- Emphasis on explainable and transparent AI models to offer human-readable justifications of the issues is also expected to become a trend in AI agents for code security.

- In 2026 and beyond, we may also see a focus on proactive threat prediction to prevent potential security vulnerabilities.

- We may witness an increased focus on enhancing data privacy and on-device data analysis for real-time guidance within development environments.

- The rise of cross-language and framework intelligence and integration with AI-powered code generation tools may also become a prominent part of future trends in code security.

Build Powerful AI Agents for Code Security with Quytech

Quytech is a trusted AI agent development company that can build all types of agents. We have dedicated experts with in-depth knowledge and hands-on experience in cybersecurity, generative AI, LLM, Agentic AI, machine learning, and other technologies needed to develop powerful AI agents for diverse use cases.

We can build AI agents for code security that are trained on your proprietary codebases, security policies, and programming environments. These agents offer LLM-powered vulnerability detection and have adaptive learning capabilities. Our experts design these agents using explainable AI principles.

You can partner with us to build AI-powered static and dynamic code analysis tools, autonomous code remediation agents, security audit and compliance assistants, and other types of AI agents to safeguard your software code.

Conclusion

AI agents offer a proactive approach to vulnerability management that substantially strengthens code security. They work autonomously within integrated development environments to scan code in real time and to detect and fix security flaws.

Beyond code security, these agents also help developers write clean, compliant code at every step of the software development process. They are moving code security toward an intelligent and adaptive future.

FAQ

AI agents are designed to enhance developers’ capabilities and productivity. They can assess code security by scanning for, identifying, and fixing issues; however, human judgment is still needed to confirm that their actions are correct.

AI agents have built-in mechanisms for self-learning from new vulnerability data and developer feedback, which lets them keep finding new vulnerabilities.

AI agents can be developed in languages such as Java, Python, and JavaScript, using frameworks and runtimes such as Spring, React, and Node.js.

An AI code security agent’s ROI can be tracked using the following metrics:

– Reduction in mean time to detect (MTTD) and mean time to remediate (MTTR)

– Number of high-impact vulnerabilities identified before software release

– Decrease in the number of false positives

– Improved developer productivity

Intelligent agents can have diverse applications in code security. They can be used in:

– Secure Software Development Lifecycle (SDLC)

– Automated Code Review and Approval

– Continuous Monitoring in CI/CD Pipelines

– Threat Detection and Remediation in Legacy Codebases

– Policy Enforcement and Compliance Validation

– Incident Response Acceleration