Key Takeaways:

- Multimodal AI is an AI technology that works with different types of inputs to deliver meaningful outputs.

- The core modalities of multimodal AI include text, images, audio, video, speech, documents, and sensor data.

- Multimodal AI works by acquiring diverse inputs, processing them, integrating them into a unified context, and generating accurate, context-aware responses.

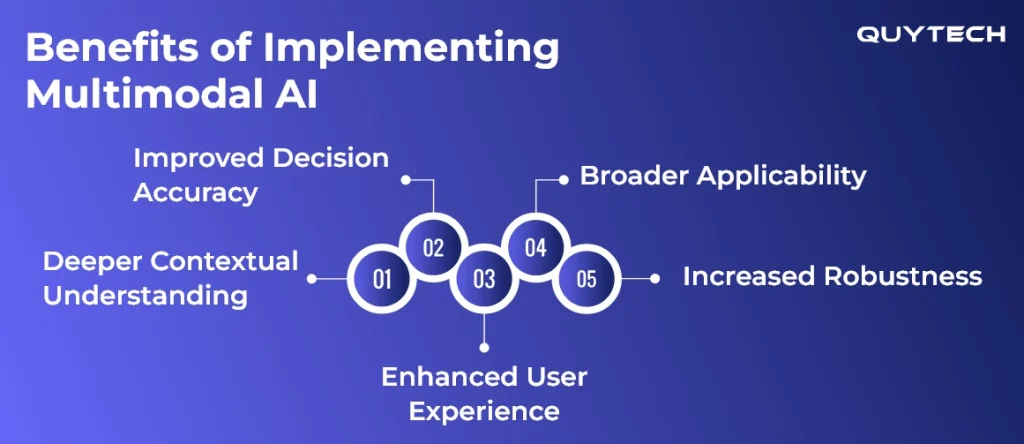

- It enhances contextual understanding, decision accuracy, user experience, and robustness, and it supports broader applicability.

- Emerging trends in multimodal AI include real-time modal processing, ethical AI integration, and sustainability adoption.

Have you ever wondered how modern AI systems can understand many kinds of input, such as text, images, and voice, and respond to them in a single interaction? The advancement behind this ability is ‘multimodal AI’. Multimodal AI is powered by technologies such as large language models, computer vision, and speech recognition. It helps modern AI systems form a realistic picture of a situation from the inputs users provide.

Multimodal AI can be seen as the eyes, ears, and brain of an AI system. It helps AI understand the context of a situation. It uses multiple processing components to handle various modalities, which lets it understand visual, audio, and textual context. This ability to process and interrelate different modalities helps multimodal AI build an accurate picture. Sounds fascinating, right? Well, there’s a lot more to multimodal AI than this.

In this blog, we will walk you through everything from the basic concept of multimodal AI to its benefits, use cases, and future trends.

What is Multimodal AI

Multimodal AI is an advanced capability that lets AI take inputs, understand them, and provide relevant output. You might be thinking that all AI models can do this, and that’s true. So what makes multimodal AI different?

What differentiates multimodal AI is its ability to accept inputs in multiple modalities. Unimodal systems handle a single input type, such as text or voice. Cross-modal systems typically convert one modality into another, such as text into an image. Multimodal AI can accept and combine several modalities at once: textual, visual, audio, document, and spoken input. It gives users a unified space where they can provide inputs in several forms and pair them together. For example, a user can attach a PDF document and ask questions about it in a text prompt.

Multimodal AI is powered by technologies such as large language models, computer vision, speech recognition, and multimodal embedding models. Together, these technologies help multimodal AI understand how inputs in different modalities relate to one another and give highly relevant responses. Common examples of multimodal AI are current AI assistants such as ChatGPT and Google Gemini. They let users combine images, voice notes, files, and, in some cases, video in a single conversation to get relevant responses.

What Led to the Rise of Multimodal AI

Multimodal AI rose to prominence because real-world information is not limited to text or speech. Real-world data comes in many modalities, such as text, images, audio, and video, and it can be structured or unstructured.

Because real-world data spans multiple modalities, traditional unimodal AI systems could not handle it as a whole. The digital world is equally diverse: people communicate through texts, images, videos, voice notes, and more. Processing all of this with unimodal AI means running a separate system for each modality and then combining the results, which is time-consuming.

A unimodal AI system can process only one modality, which is not enough given the variety and volume of data generated every day. This limitation drove a series of advances that eventually paved the way for multimodal AI. Multimodal AI can process several data modalities together, allowing data to be analyzed as it is generated.

Core Modalities in Multimodal AI Systems

Now, let’s take a look at the core modalities that a multimodal AI system works on. These modalities can be classified into:

Text

Text is the most basic input a user provides to get a desired response, typically in the form of a prompt. It is one of the most important modalities and the one most commonly used in multimodal prompts. Text includes articles, messages, documents, etc. It helps convey the intent, logic, and context of the data.

Images

Images are visual data such as pictures, diagrams, charts, and graphics. Images help AI understand data and derive meaning and context from it. They add visual clarity, enabling AI to recognize the elements present in an image and how they relate to one another.

Audio

Audio includes data represented as sound, such as human speech, music, and noise. The audio modality helps AI capture sound and derive context from it by analyzing tone, emotion, and intent. Audio can provide context that text alone misses, because it preserves cues such as tone of voice and background sounds.

Video

Video is a modality made up of a sequence of images (frames) shown over time, often combined with audio. Because video shows an event as it unfolds, it helps AI understand context at a deeper level without having to infer motion from still images. It also helps AI understand how things change over time.
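
Since video is a sequence of frames, many video-understanding pipelines do not analyze every frame. A common preprocessing step is to sample frames at a fixed time interval. The sketch below is a minimal illustration of that idea under our own assumptions. `sample_frame_indices` is a hypothetical helper, and the frame rate is assumed to be known. Real systems may instead sample uniformly across the clip or pick keyframes.

```python
def sample_frame_indices(num_frames: int, fps: float, every_seconds: float) -> list[int]:
    """Return the indices of frames to keep when sampling one frame
    every `every_seconds` seconds from a clip recorded at `fps`.
    Hypothetical helper for illustration only."""
    if num_frames <= 0 or fps <= 0 or every_seconds <= 0:
        return []
    # Number of frames between two consecutive samples (at least 1).
    step = max(1, round(fps * every_seconds))
    return list(range(0, num_frames, step))


# A 10-second clip at 30 fps has 300 frames; one frame per second keeps 10 of them.
indices = sample_frame_indices(num_frames=300, fps=30, every_seconds=1.0)
print(len(indices), indices[:3])  # 10 [0, 30, 60]
```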

Speech

Speech refers to spoken language. Although it falls under the audio category, it is usually handled by dedicated models, such as speech recognition models, because it is human language rich in emotion and tone. Speech offers a deeper understanding of the intent behind words. It helps AI understand people in their natural, unscripted form, and it carries richer context than text alone.

Documents

Documents include PDFs, forms, reports, invoices, etc. They often combine multiple modalities, such as text and images. Their structured, formatted presentation of information, with elements like headings, tables, and form fields, helps AI grasp context more easily.

Sensor and Structured Data

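Sensor data is data generated by sensing devices such as GPS receivers, accelerometers, temperature sensors, and cameras. It is raw, is collected continuously as it is generated, and is usually represented in numerical form. Structured data, such as database records and transaction tables, is similarly organized into well-defined fields and values.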
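
To see what "numerical form" means in practice, here is a minimal sketch of one common way to prepare a raw sensor stream for a model. The stream is cut into fixed-size windows, and each window is summarized with simple statistics. The function name `window_features`, the choice of mean/min/max, and the sample readings are illustrative assumptions, not a standard.

```python
from statistics import mean


def window_features(readings: list[float], window: int) -> list[tuple[float, float, float]]:
    """Split a stream of readings into non-overlapping windows and summarize
    each window as (mean, min, max). An incomplete trailing window is dropped.
    Hypothetical helper for illustration only."""
    if window <= 0:
        raise ValueError("window must be positive")
    features = []
    for start in range(0, len(readings) - window + 1, window):
        chunk = readings[start:start + window]
        features.append((mean(chunk), min(chunk), max(chunk)))
    return features


# Hypothetical temperature readings (°C), one per second.
temps = [20.0, 20.5, 21.0, 21.5, 30.0, 31.0, 29.0, 30.0]
print(window_features(temps, window=4))
# [(20.75, 20.0, 21.5), (30.0, 29.0, 31.0)]
```

The second window's jump in temperature stands out immediately in the summarized features, which is the kind of numeric signal a model can fuse with other modalities.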

How Multimodal AI Works

Now that you understand the core modalities of multimodal AI, the next question is probably how it all works, right? Here’s a step-by-step look at how multimodal AI works:

Step 1: Input Acquisition

The first step in multimodal AI’s working mechanism is input acquisition. Here, inputs of different modalities are given to the multimodal AI system. These include text, images, videos, audio, documents, etc. These inputs often describe the same situation, each from a different perspective.
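
One way to picture input acquisition is as a single request object that carries whichever modalities the user supplied. The `MultimodalInput` class below is a hypothetical sketch, not the input format of any particular product, and its field names are our own.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MultimodalInput:
    """Hypothetical container bundling whatever modalities a user supplied."""
    text: Optional[str] = None
    image_bytes: Optional[bytes] = None
    audio_bytes: Optional[bytes] = None
    video_bytes: Optional[bytes] = None
    document_path: Optional[str] = None

    def modalities(self) -> list[str]:
        """Names of the modalities that were actually provided."""
        present = {
            "text": self.text,
            "image": self.image_bytes,
            "audio": self.audio_bytes,
            "video": self.video_bytes,
            "document": self.document_path,
        }
        return [name for name, value in present.items() if value is not None]


# A text question paired with a (truncated, placeholder) image.
request = MultimodalInput(text="What is wrong with this part?", image_bytes=b"\x89PNG...")
print(request.modalities())  # ['text', 'image']
```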

Step 2: Input Processing

Once the inputs are acquired, the next step is to process them. Each modality is different, so each one is processed differently, typically by an encoder designed for that modality (for example, a language model for text or a vision model for images). Processing converts every input into an internal representation, often a numerical vector called an embedding, which the AI later uses to generate a response.
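
The sketch below illustrates the routing idea behind this step: each modality goes to its own encoder, and every encoder returns a fixed-size vector. The encoders here are deliberately toy, hand-written stand-ins. Text uses a hashed bag-of-words, and grayscale images use an intensity histogram. Real multimodal systems use learned neural encoders instead. All names (`encode_text`, `encode_image`, `process`, `DIM`) are hypothetical.

```python
import hashlib
import math

DIM = 8  # toy embedding size; learned encoders use far larger vectors


def _normalize(vec: list[float]) -> list[float]:
    """Scale a vector to unit length (leave an all-zero vector unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def encode_text(text: str) -> list[float]:
    """Toy text encoder: hash each word into one of DIM buckets (bag of words)."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    return _normalize(vec)


def encode_image(pixels: list[list[int]]) -> list[float]:
    """Toy image encoder: histogram of grayscale intensities (0-255) in DIM bins."""
    vec = [0.0] * DIM
    for row in pixels:
        for p in row:
            vec[min(p * DIM // 256, DIM - 1)] += 1.0
    return _normalize(vec)


# Each modality is routed to the encoder built for it.
ENCODERS = {"text": encode_text, "image": encode_image}


def process(inputs: dict) -> dict[str, list[float]]:
    """Encode every provided input with the encoder for its modality."""
    return {modality: ENCODERS[modality](data) for modality, data in inputs.items()}


embeddings = process({"text": "a cracked gear", "image": [[0, 128], [255, 64]]})
print({k: len(v) for k, v in embeddings.items()})  # {'text': 8, 'image': 8}
```

The point of the design is that, whatever the input looks like, every modality ends up in the same kind of container (a vector), which is what makes the next integration step possible.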

Step 3: Cross-Modal Integration & Unified Context Derivation

After processing, the next step is to integrate (or fuse) the processed information to understand how the different inputs relate to one another. This helps the AI understand the real event by combining what it derives from each input. The resulting unified context helps the multimodal AI grasp the main intent behind the information provided and plan its response accordingly.
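
In the simplest terms, integration means combining the per-modality vectors into one joint representation. The sketch below shows two of the most basic fusion rules: concatenation and element-wise averaging. It assumes each modality has already been encoded into a vector. Production models usually learn how to fuse modalities (for example, with attention layers), so treat these fixed rules as an illustration only. The function names are hypothetical.

```python
def fuse_concat(embeddings: dict[str, list[float]], order: list[str]) -> list[float]:
    """Concatenate per-modality vectors, in a fixed order, into one joint vector."""
    joint: list[float] = []
    for modality in order:
        joint.extend(embeddings[modality])
    return joint


def fuse_mean(embeddings: dict[str, list[float]]) -> list[float]:
    """Element-wise average of per-modality vectors. Requires every vector to have
    the same dimension, as in a shared multimodal embedding space."""
    vectors = list(embeddings.values())
    dim = len(vectors[0])
    if any(len(v) != dim for v in vectors):
        raise ValueError("all embeddings must have the same dimension")
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


emb = {"text": [1.0, 0.0, 0.5], "image": [0.0, 1.0, 0.5]}
print(fuse_concat(emb, ["text", "image"]))  # [1.0, 0.0, 0.5, 0.0, 1.0, 0.5]
print(fuse_mean(emb))                       # [0.5, 0.5, 0.5]
```

Averaging only makes sense when all modalities share one embedding space. Concatenation works for vectors of any size, but it produces a longer joint vector.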

Step 4: Response Generation

After deriving a unified context, the multimodal AI generates a relevant response based on the user’s requirements. For example, if the user asks for a textual response, the output is generated as text. Because it draws on several modalities, the output is often more accurate and relevant than what a single-modality system could produce.

Benefits of Implementing Multimodal AI

Implementing multimodal AI brings immense benefits. It supports better contextual understanding, decision accuracy, user experience, and much more. Let’s explore these benefits in detail:

Deeper Contextual Understanding

Unlike unimodal AI systems, multimodal AI can understand context at a deeper level. This is because it interprets the input from multiple perspectives, such as textual, visual, and motion-based ones, which gives it a fuller understanding.

Improved Decision Accuracy

As mentioned above, multimodal AI interprets input from diverse perspectives, which helps it grasp context deeply. This, in turn, helps it make more accurate decisions, whether it is deriving context or choosing the right response.

Enhanced User Experience

Since multimodal AI can understand varied inputs, it can, in a sense, see what users see and hear what they hear. It can also understand the event described by the input. This ability helps it provide realistic and natural responses, and that naturalness enhances the user experience.

Broader Applicability

Multimodal AI is not limited to a certain type of data; it applies to a broad range of tasks. It lets users get the responses they need for different kinds of input, all in one place. This eliminates the need to jump from one system to another to process inputs in different modalities.

Increased Robustness

Because multimodal AI uses separate processing components for different modalities, the overall system becomes more robust. Even if one component fails, the system can still produce useful results from the remaining modalities. For example, audio processing might fail because of background noise while text and image processing still succeed.
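
This kind of graceful degradation is often implemented with late fusion. Each modality produces its own score, and the final decision combines whichever scores are available. Here is a minimal sketch that assumes per-modality confidence scores in [0, 1] and fixed, hypothetical weights. `late_fusion` is an illustrative name, not a library function.

```python
from typing import Optional


def late_fusion(scores: dict[str, Optional[float]], weights: dict[str, float]) -> float:
    """Weighted average of per-modality scores, skipping any modality whose score
    is None (for example, because its pipeline failed). The weights are
    renormalized over the modalities that remain."""
    available = {m: s for m, s in scores.items() if s is not None}
    if not available:
        raise ValueError("no modality produced a score")
    total_weight = sum(weights[m] for m in available)
    return sum(weights[m] * s for m, s in available.items()) / total_weight


weights = {"text": 0.4, "image": 0.4, "audio": 0.2}
print(late_fusion({"text": 0.9, "image": 0.7, "audio": 0.5}, weights))   # all modalities
print(late_fusion({"text": 0.9, "image": 0.7, "audio": None}, weights))  # audio failed
```

With all three modalities, the weighted score is 0.74. When the audio score is missing, the weights are renormalized over text and image and the score becomes 0.8, so the system still returns an answer instead of failing.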

Real-World Applications of Multimodal AI Across Industries

Understanding a concept without understanding its practical application can be quite challenging, right? But not anymore! Here’s a dedicated section consisting of some real-world applications of multimodal AI across industries:

Healthcare

In the healthcare sector, multimodal AI helps extract patient information from different sources, such as CT scans, X-rays, MRIs, doctors’ prescriptions, and patient speech during checkups. By understanding context across these sources, multimodal AI assists in creating personalized treatment plans.

Example: IBM Watson Health (whose assets IBM sold in 2022; the business now operates as Merative) used AI systems that processed different types of clinical input to support diagnosis and treatment planning.

Finance & Banking

In the finance and banking sector, multimodal AI helps institutions improve security and decision-making. It can help them process structured transaction data, understand user behavior patterns, and analyze audio from customer calls.

Example: JPMorgan Chase uses multimodal data for fraud detection, risk assessment, and document automation.

Retail & E-Commerce

The retail and e-commerce sector uses multimodal AI to improve search and recommendations for users. Multimodal AI helps users find accurate information by enabling text-description search, image search, voice search, etc.

Example: Amazon uses multimodal AI to process visual, textual, and voice-based data to enrich the user shopping experience.

Customer Support & Virtual Assistance

In the customer support and virtual assistance sector, multimodal AI is used to derive information from audio messages, texts, images, etc. It helps in accurately identifying the issues faced by customers and providing optimal resolution.

Example: Google Assistant uses multimodal AI to handle user requests by processing voice, visual, and textual inputs.

Marketing

In the marketing sector, multimodal AI helps organizations analyze and generate content. It processes visual data such as images and videos, textual data such as tags and captions, and audio data such as trending sounds. This helps marketing departments spot trends and capitalize on them in time.

Example: Coca-Cola utilizes multimodal AI to process varied modalities of data like social media reels, images, hashtags, etc., to create engaging content and marketing campaigns.

Manufacturing

Manufacturing industries use multimodal AI to analyze data from sensors, video footage, maintenance records, etc. Multimodal AI helps accurately determine when maintenance is needed and also supports quality checks.

Challenges and Limitations of Multimodal AI

While multimodal AI brings immense benefits to numerous industries, implementing it comes with its fair share of challenges. Like any other technology, multimodal AI has its limitations. Here are some of them:

Complexity of Model Design

Unlike traditional unimodal AI models, multimodal AI processes multiple modalities at once. This naturally makes its design more complex, because each modality needs its own processing technique and the outputs must then be aligned and combined. Designing such a complex system can be technically challenging.

High Data Requirements

Multimodal AI requires large amounts of properly aligned data, such as images paired with accurate captions, to process modalities effectively. A lack of large, well-aligned datasets can hamper training and increase costs.
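
"Aligned" here means that the modalities in each training example belong together, for instance, an image and the caption that describes it. Here is a minimal sketch of one basic alignment step. It assumes every file is keyed by a shared sample ID (a hypothetical convention) and keeps only the samples that have both modalities.

```python
def align_pairs(images: dict[str, str], captions: dict[str, str]) -> list[tuple[str, str, str]]:
    """Join two modality collections on a shared sample ID. Samples missing either
    modality are dropped, because an unpaired example cannot show the model how
    the two modalities relate. Hypothetical helper for illustration only."""
    shared_ids = sorted(images.keys() & captions.keys())
    return [(sid, images[sid], captions[sid]) for sid in shared_ids]


images = {"001": "img_001.png", "002": "img_002.png", "003": "img_003.png"}
captions = {"001": "A red bicycle", "003": "A dog on a beach", "004": "A city skyline"}
pairs = align_pairs(images, captions)
print(len(pairs), "aligned of", len(images | captions), "samples")  # 2 aligned of 4 samples
```

Here only two of the four samples are usable as training pairs. This illustrates why large, well-aligned datasets are hard to come by.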

High Computational Costs

Since multimodal AI works across multiple modalities, its computational requirements are higher. Higher computational requirements naturally raise costs, which can make organizations hesitant to adopt multimodal AI.
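
A quick calculation shows why costs climb. Assume a Vision Transformer-style image encoder that splits a 224 × 224 image into 16 × 16-pixel patches (the ViT-B/16 configuration), with each patch becoming one token. Also assume a video is sampled at one frame per second with no token compression. These are illustrative assumptions; many real systems downsample or merge tokens.

```python
def vit_patch_tokens(height: int, width: int, patch: int = 16) -> int:
    """Number of patch tokens a ViT-style encoder produces for one image.
    Assumes both image dimensions are multiples of the patch size."""
    return (height // patch) * (width // patch)


image_tokens = vit_patch_tokens(224, 224)   # 14 * 14 patches
video_tokens = image_tokens * 60            # one frame per second for 60 seconds
print(image_tokens, video_tokens)           # 196 11760
```

A one-minute video thus produces 60 times as many image tokens as a single picture. Standard self-attention cost grows roughly with the square of sequence length. Processing those tokens as one sequence would therefore need on the order of 60² = 3,600 times the attention compute of one image.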

The Future of Multimodal AI

Multimodal AI is not a passing trend; it is here to stay, evolve, and expand across industries. Its future is likely to be shaped by real-time processing, ethical AI integration, and sustainability adoption. Let’s look at these trends in detail:

Real-Time Modal Processing

Multimodal AI’s current ability to process multiple inputs is already impressive, and future systems are expected to compute even faster and respond in real time. This will speed up the analysis of multimodal data and make AI models more responsive.

Ethical AI Integration

The future of multimodal AI also includes ethical AI integration. This means establishing ethical guidelines, ensuring regulatory compliance, and making sure the data being processed is not used for unethical purposes. Ethical AI integration will build responsibility, explainability, and accountability into multimodal AI.

Sustainability Adoption

Sustainability adoption in multimodal AI will help organizations grow without harming the environment. It aims to ensure that the organizations, applications, and systems using multimodal AI operate without consuming resources excessively. The goal is a balance between technological innovation and sustainable development.

Final Thoughts

As the need for faster, smarter, and more capable AI models grows, multimodal AI is emerging as a key solution to meet this demand. It can handle multiple input modalities, such as text, audio, video, images, and documents. Multimodal AI is not just part of the transformation; it is driving it.

Backed by technologies such as deep learning, NLP, and computer vision, multimodal AI enables applications to process varied inputs together and provide accurate, natural responses. Its benefits range from deeper contextual understanding and decision accuracy to enhanced user experience and robustness. Multimodal AI is not a passing trend but a lasting advancement in artificial intelligence.

FAQs

Does multimodal AI need every modality to work?

Not necessarily. Multimodal AI does not need all modalities; it can work with a few of them or even a single one.

Can multimodal AI work with limited data?

Multimodal AI works best with large datasets, but it can still work with smaller ones. In low-data environments, however, accuracy depends heavily on the quality of the available data, because the model has less information to learn from.

Is multimodal AI suitable for small businesses?

Yes, multimodal AI is suitable for small businesses, although implementation costs can be high for organizations with limited budgets.

Is multimodal AI more accurate than traditional AI?

Often, yes. Multimodal AI processes multiple inputs, so it can understand the context behind the data from multiple perspectives. This tends to improve accuracy when the additional modalities carry relevant information.

Is multimodal AI secure?

The security of a multimodal AI system depends directly on how its data is collected, stored, and processed. Enforcing strict compliance controls can strengthen it.

Can multimodal AI be integrated into existing systems?

Yes, multimodal AI solutions can be integrated into existing systems through APIs.
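
As an illustration, many hosted multimodal models accept a text prompt and an image in a single HTTP request. The sketch below builds, but does not send, a JSON body in the message format used by OpenAI's Chat Completions API. In that format, a message's `content` can be a list of `text` and `image_url` parts. The model name and the helper `build_chat_request` are assumptions for illustration. Check your provider's current documentation before relying on either.

```python
import base64
import json


def build_chat_request(prompt: str, image_bytes: bytes, mime: str = "image/png",
                       model: str = "gpt-4o") -> str:
    """Build a JSON request body pairing a text prompt with an inline image,
    in the shape of OpenAI's Chat Completions API. Nothing is sent here.
    Hypothetical helper; the default model name is an assumption."""
    # Inline the image as a base64 data URL.
    data_url = f"data:{mime};base64,{base64.b64encode(image_bytes).decode('ascii')}"
    body = {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }
    return json.dumps(body)


payload = build_chat_request("Summarize the defect shown in this photo.", b"\x89PNG\r\n")
print(json.loads(payload)["messages"][0]["content"][1]["type"])  # image_url
```

To actually send it, an application would POST this body to the provider's endpoint (for OpenAI, `https://api.openai.com/v1/chat/completions`) with an `Authorization: Bearer <API key>` header. Other providers use different request shapes.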

How does multimodal AI handle noisy or incomplete data?

Multimodal AI can understand context from multiple perspectives by processing multiple inputs. This helps compensate for noise or gaps in any single data source.

Does multimodal AI replace human decision-making?

No, multimodal AI does not replace human decision-making. Instead, it supports and enhances it, especially in critical domains.